Let’s look at a few emerging capabilities that really help make the information gleaned from scans and assessment more impactful to the operational decisions you make every day.

These capabilities are not common to all the leading vulnerability management offerings today. But we expect that most (if not all) will be core capabilities of these platforms in some way over the next 2-3 years, so watch for increasing M&A and technology integration for these functions.

Attack Path Analysis

If no one hears a tree fall in the woods, has it really fallen? The same question can be asked about a vulnerable system. If an attacker can’t get to the vulnerable device, is it really vulnerable? The answer is yes, it’s still vulnerable, but remediating it is clearly less urgent. So tracking which assets are accessible to a variety of potential attackers becomes critical for an evolved vulnerability management platform.

Typically this analysis is based on ingesting firewall rule sets and router/switch configuration files. With some advanced analytics the tool determines whether an attacker could (theoretically) reach the vulnerable devices. This adds a critical third leg to the “oh crap, I need to fix it now” decision process depicted below.

Obviously most enterprises have fairly complicated networks, which means an attack path analysis tool must be able to crunch a huge amount of data to work through all the permutations and combinations of possible paths to each asset. You should also look for native support of the devices (firewalls, routers, switches, etc.) you use, so you don’t have to do a bunch of manual data entry. Given the frequency of change in most environments, manual entry is likely a complete non-starter. Finally, make sure the visualization and reports on paths present the information in a way you can use.
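To make the idea concrete, here is a minimal sketch of the reachability question, assuming the firewall and router configurations have already been reduced to a simple map of which network zones may talk to which. The zone names, the `ALLOWED_FLOWS` map, and the `find_attack_path` function are all hypothetical illustrations, not the data model of any real product. Real tools work at the level of individual rules, ports, and NAT, and at far larger scale.

```python
from collections import deque

# Hypothetical "allow" edges between network zones. A real tool would derive
# these from ingested firewall rule sets and router/switch configurations.
ALLOWED_FLOWS = {
    "internet": ["dmz"],
    "dmz": ["app_tier"],
    "app_tier": ["db_tier"],
    "corp_lan": ["app_tier", "mgmt"],
    "mgmt": ["db_tier"],
}


def find_attack_path(flows, source, target):
    """Breadth-first search: return the shortest zone path, or None."""
    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        zone = path[-1]
        if zone == target:
            return path
        for next_zone in flows.get(zone, []):
            if next_zone not in visited:
                visited.add(next_zone)
                queue.append(path + [next_zone])
    return None  # no allowed sequence of hops reaches the target


# An external attacker can theoretically reach the database tier...
print(find_attack_path(ALLOWED_FLOWS, "internet", "db_tier"))
# ...but has no allowed route to the management zone.
print(find_attack_path(ALLOWED_FLOWS, "internet", "mgmt"))
```

A vulnerable device in a zone with no path from a given attacker position is still vulnerable, but it can reasonably drop down the remediation list.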

By the way, attack path analysis tools are not really new. They have existed for a long time, but never really achieved broad market adoption. As you know, we’re big fans of Mr. Market, which means we need to get critical for a moment and ask what’s different now that would enable the market to develop? First, integration with the vulnerability/threat management platforms makes this information part of the threat management cycle rather than a stand-alone function, and that’s critical. Second, current tools can finally offer analysis and visualization at an enterprise scale. So we expect this technology to be a key part of the platforms sooner rather than later; we already see some early technical integration deals and expect more.

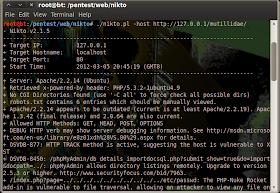

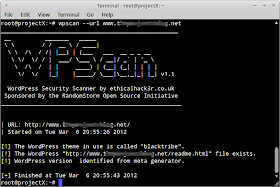

Automated Pen Testing

Another key question raised by a long vulnerability report needs to be, “Can you exploit the vulnerability?” Like a vulnerable asset without a clear attack path, if a vulnerability cannot be exploited thanks to some other control or the lack of a weaponized exploit, remediation becomes less urgent. For example, perhaps you have a HIPS product deployed on a sensitive server that blocks attacks against a known vulnerability. Obviously your basic vulnerability scanner cannot detect that, so the vulnerability will be reported just as urgently as every other one on your list.

Having the ability to actually run exploits against vulnerable devices as part of a security assurance process can provide perspective on what is really at risk, versus just theoretically vulnerable. In an integrated scenario a discovered vulnerability can be tested for exploit immediately, to either shorten the window of exposure or provide immediate reassurance.
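As a rough illustration of how reachability and exploit results might feed the "fix it now" decision, the sketch below discounts a scanner's severity score when there is no attack path or when the exploit attempt failed. The `Finding` fields, the 0-10 severity scale, and the 0.5 discount factors are assumptions chosen for the example, not a scoring model from any product or standard.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    asset: str
    severity: float   # scanner-reported severity, assumed to be on a 0-10 scale
    reachable: bool   # did attack path analysis find a route to the asset?
    exploited: bool   # did a vetted exploit actually succeed against it?


def urgency(finding):
    """Discount severity when the asset is unreachable or the exploit failed."""
    score = finding.severity
    if not finding.reachable:
        score *= 0.5  # assumed discount: still vulnerable, but less urgent
    if not finding.exploited:
        score *= 0.5  # assumed discount: another control may be blocking it
    return score


def triage(findings):
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=urgency, reverse=True)


findings = [
    Finding("hr-server", 9.0, reachable=False, exploited=False),
    Finding("web-01", 6.0, reachable=True, exploited=True),
    Finding("db-02", 8.0, reachable=True, exploited=False),
]
for f in triage(findings):
    print(f.asset, urgency(f))
```

In this toy data set the medium-severity web server that is both reachable and exploitable lands ahead of the higher-severity systems protected by other controls.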

Of course there is risk with this approach, including the possibility of taking down production devices, so use pen testing tools with care. But to really know what can be exploited and what can’t, you need to use live ammunition. Be sure to use fully vetted, professionally supported exploit code, with a real quality assurance process behind the exploits you try. Open source exploits are cool, and less stable code is fine on a test/analysis network. But you probably don’t want to launch an untested exploit against a production system. Not if you like your job, anyway.

Compliance Automation

In the rush to get back to our security roots, many folks have forgotten that the auditor is still going to show up every month/quarter/year and you need to be ready. That process burns resources that could otherwise be used on more strategic efforts, just like everything else. Vulnerability scanning is a critical part of every compliance mandate, so scanners have pumped out PCI, HIPAA, SOX, NERC/FERC, etc. reports for years. But that’s only the first step in compliance automation. Auditors need plenty of other data to determine whether your control set is sufficient to satisfy the regulation. That includes things like configuration files, log records, and self-assessment questionnaires.

We expect you to see increasingly robust compliance automation in these platforms over time. That means a workflow engine to help you manage getting ready for your assessment. It means a flexible integration model to allow storage of additional unstructured data in the system. The goal is to ensure that when the auditor shows up your folks have already assembled all the data they need and can easily access it. The easier that is, the sooner the auditor will go away and let your folks get back to work.
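At its simplest, the readiness part of that workflow is a checklist comparison. The sketch below assumes a fixed set of evidence types drawn from the examples above (scan reports, configuration files, log records, self-assessment questionnaires). The names `REQUIRED_EVIDENCE`, `missing_evidence`, and `audit_ready` are hypothetical, and a real mandate would define its own list.

```python
# Evidence types an auditor might ask for (illustrative, not tied to any
# specific regulation).
REQUIRED_EVIDENCE = {
    "scan_report",
    "configuration_files",
    "log_records",
    "self_assessment_questionnaire",
}


def missing_evidence(collected, required=REQUIRED_EVIDENCE):
    """Return the required evidence types not yet collected, sorted by name."""
    return sorted(set(required) - set(collected))


def audit_ready(collected, required=REQUIRED_EVIDENCE):
    """True once every required evidence type has been assembled."""
    return not missing_evidence(collected, required)


collected_so_far = ["scan_report", "log_records"]
print(missing_evidence(collected_so_far))
print(audit_ready(collected_so_far))
```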

Patch/Configuration Management

Finally, you don’t have to stretch to see the value of broader configuration and/or patch management capabilities within the vulnerability management platform. You are already finding what’s wrong (either vulnerable or improperly configured), so why not just fix it? Clearly there is plenty of overlap with existing configuration and patching tools, and you could just as easily make the case that those tools can and should add vulnerability management functions. Regardless of which platform ultimately handles vulnerability and patch/configuration, there are clear advantages to having a common environment.

There are also clear disadvantages to integrating finding and fixing these issues, mostly concerning separation of duties. Your auditors may have an issue with the same platform being used to figure out what’s wrong and then to fix it, but that’s a point of discussion for your next assessment. Clearly in terms of leverage and efficiency, the ability to find and fix problems in a single environment is attractive to understaffed shops (and who has resources to burn out there?) and folks who need to improve efficiency (everyone).

Benchmarking

We are big fans of benchmarking your environment against other organizations of similar size and industry to figure out where you stand. In fact, we wrote a paper last year on the topic. But compared to the other value-add capabilities we have already described, our research indicates that benchmarking isn’t the highest priority item. We still find great value in comparing configurations / patch windows / malware infections / any other metrics you gather against other companies, and we think this should be another data point to help determine which actions need to be taken right now. But for the time being Mr. Market says this capability is a bit further off.

We understand that some of these capabilities already exist within your existing IT management stacks, so a vulnerability management platform should cleanly integrate with those existing functions. In fact, we will discuss those logical integration points next. But for now suffice it to say that smaller organizations (and enterprises acting like small organizations) may favor leveraging a single platform to provide a closed-loop ability to find problems and fix them without having to deal with multiple systems. So over time it’s quite possible that vulnerability management platforms will evolve into a broader IT ops platform – offering deeper asset management, performance management, the aforementioned configuration/patch capabilities, and other more traditional IT Ops functions.

Will the evolved vulnerability management platform ever really get to this point, of being everything to everyone? Probably not – the natural order of the IT business means only one, or maybe two of the current players are likely to remain stand-alone entities. The rest will likely be acquired by larger providers and integrated into the acquirer’s stack, or they will go away altogether.

But regardless of how much stuff is built into the vulnerability management platform, organizations are unlikely to move all their technologies into this platform in a flash cut. So for the foreseeable future these platforms need to play nicely with other management technologies. In the next post we will tackle the integration points with these systems, and define a set of interfaces and standards that need to be supported by the platform.

- Mike Rothman
